What is GPT-4o? A summary of OpenAI’s new multi-modal model

GPT-4 is now fully multi-modal. Everything you need to know about GPT-4o, summary and highlights included.

GPT-4 is now fully multi-modal. This means that the way in which we interact with the model will change forever. What started out as an impressive, but limited, chatbot has blossomed into a full-fledged AI assistant that can translate languages, describe and create images and videos, compose and sing songs, and hold full verbal conversations with users and even other AIs.

Release blog posts for AI models like GPT-4o can be technical and hard to interpret. In this article, we'll provide a concise summary of the new capabilities, performance, and features of GPT-4o, ensuring you receive only the essential information about this latest model.

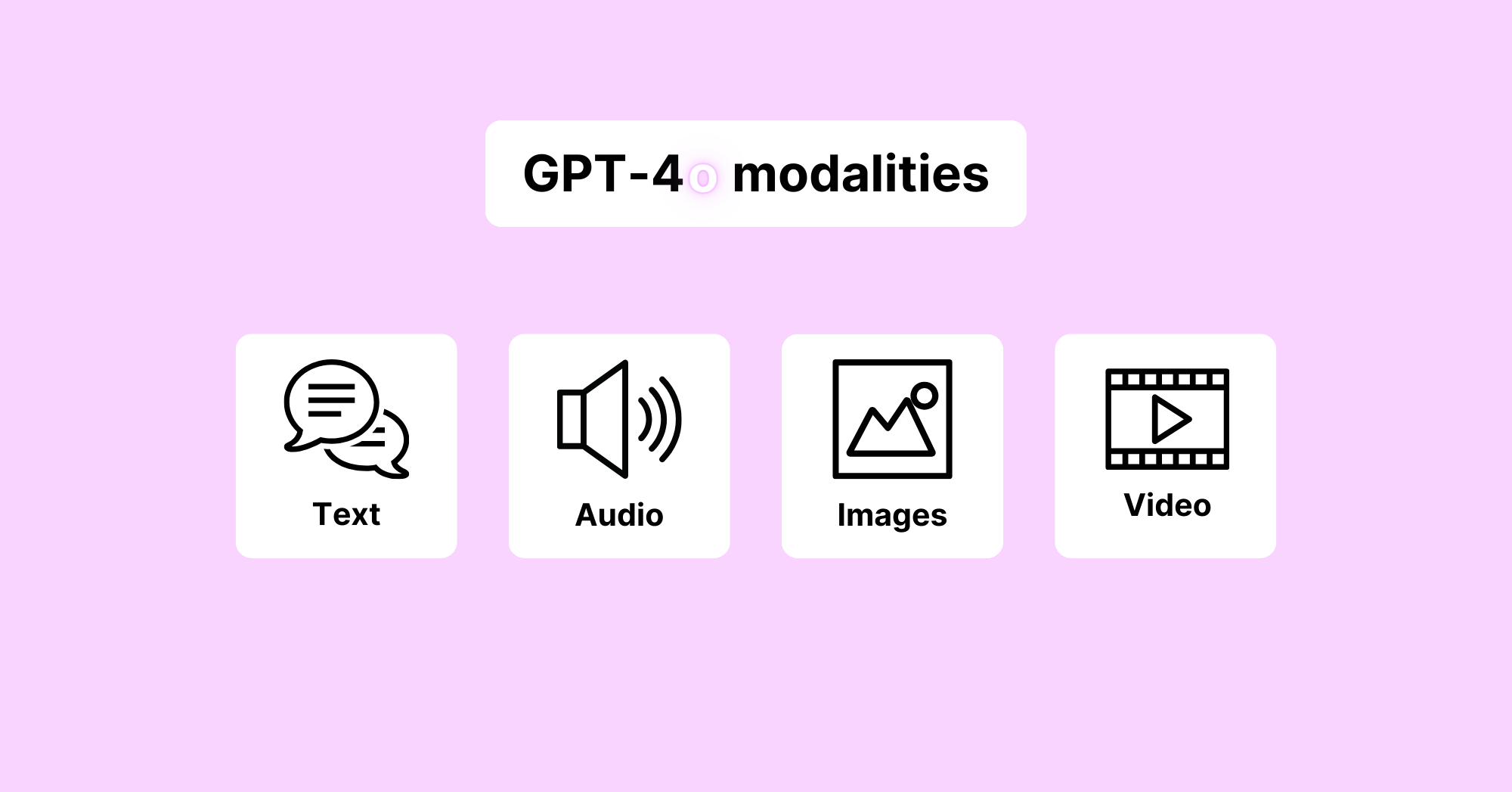

GPT-4o has text, audio, and vision capabilities

The “o” in GPT-4o stands for “omni,” a nod to the model’s new multi-modal capabilities. In the context of AI models, multi-modal refers to the ability of a model to process and generate content in multiple forms of data or "modes." In the case of GPT-4o, these modes are text, audio, images and videos.

Any combination of modes can be used as inputs and requested as outputs for GPT-4o. Here are a few examples:

- Text + Image → Text

- Prompt: Please explain in 3 sentences or less what’s occurring in the photograph attached in this prompt.

- Text + Image → Image

- Prompt: Using the attached clip art image of an apple as a guide, generate a similar clip art image of an orange.

- Text → Image

- Prompt: Generate a photorealistic image of a dog wearing lederhosen.

GPT-4o uses a single neural network to process inputs and generate outputs, representing a departure from previous multi-modal products (like Voice Mode) offered by OpenAI. The single-model design of GPT-4o likely contributes to its increased efficiency, resulting in doubled speed and a 50% reduction in API usage costs.

Here are a few examples of some practical tasks GPT-4o can be used for:

- Acting as a math tutor, listening and responding in a human-like voice to spoken questions.

- Acting as a real-time translator, listening to spoken speech and responding with the correct translation.

- Composing and singing a bespoke song or lullaby.

- Verbally interacting with other AIs (e.g. ask each other questions, sing or harmonize together)

Like what you've read so far?

Become a subscriber and never miss out on free new content.

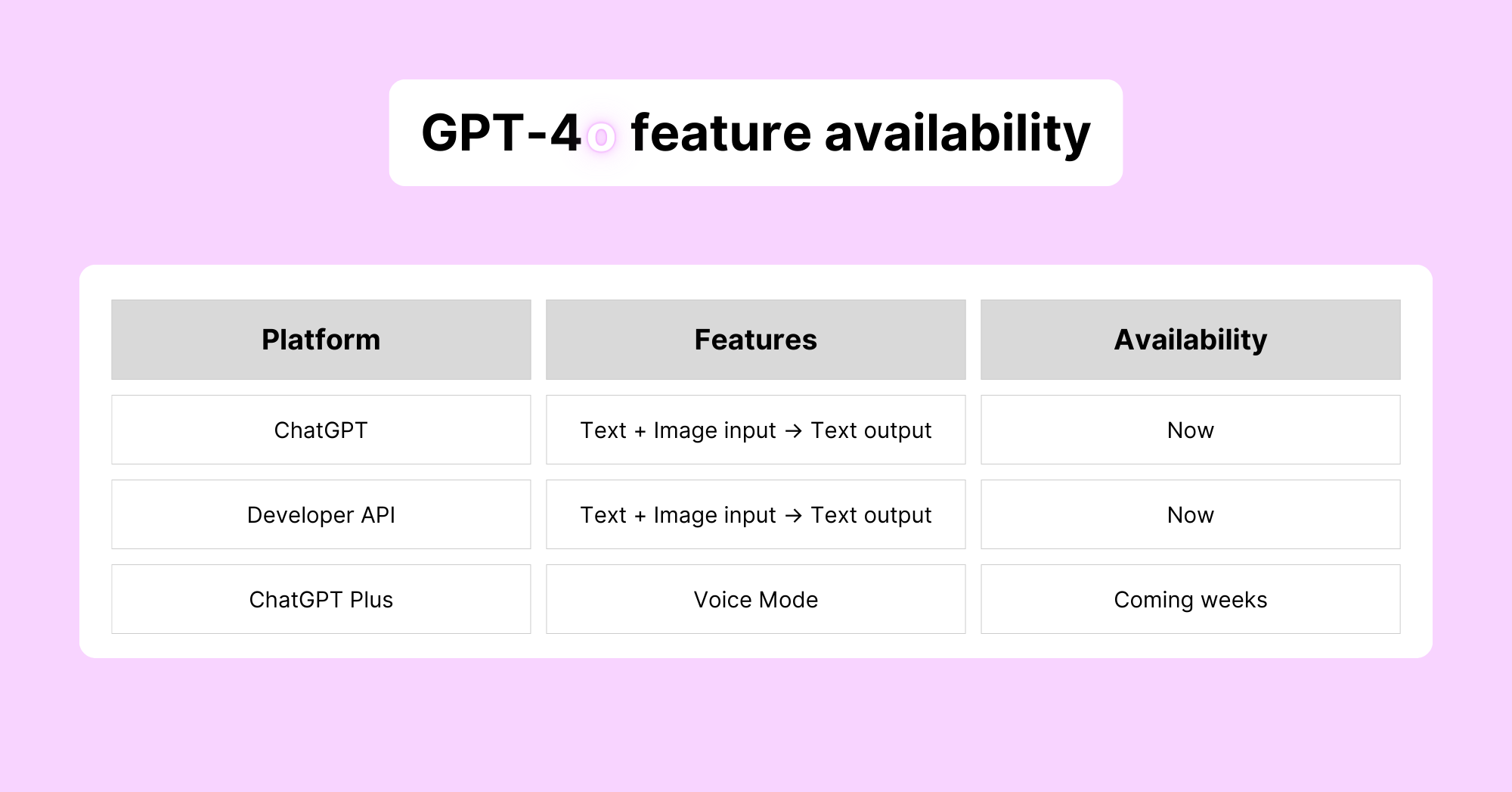

Multi-modal capabilities will be rolled out iteratively

Just because these exciting multi-modal capabilities exist, doesn’t mean we can use them just yet. Unfortunately, OpenAI’s initial release of GPT-4o only supports text and image inputs and text outputs.

In its release blog post, OpenAI cites safety as a primary concern for gradual rollout of audio and video features. Adding new modalities to an already highly accurate and capable model undoubtedly introduces new risks for potential misuse, including fraud and misinformation. As a result, GPT-4o’s audio generation feature will be limited to a selection of preset voices upon its release in a few weeks.

The table below outlines when and to whom various features and functionality will be rolled out.

Interestingly, OpenAI has shared very little about GPT-4o’s video generation capabilities, which it claims will be released to “a small group of trusted partners in the API in the coming weeks.” Although not explicitly stated in the release post, this may be related to concerns regarding misinformation and the upcoming 2024 presidential election, which OpenAI outline in a separate blog post.

GPT-4o outperforms on multi-lingual, audio, and vision tasks

GPT-4o achieves stunning performance on multi-modal tasks. It outperforms OpenAI’s own Whisper-v3, an automatic speech recognition (ASR) model, on both speech recognition and speech translation. In the M3Exam, a benchmark that assesses the multilingual and vision capabilities of AI models to perform tasks they haven’t been specifically trained on, GPT-4o outperforms GPT-4 across all tasks. Additionally, GPT-4o outperforms Gemini 1.0 Ultra, Gemini 1.5 Pro, and Claude Opus on seven vision understanding metrics, demonstrating SOTA performance.

According to OpenAI, GPT-4o matches GPT-4 Turbo performance on text, reasoning, and coding tasks, indicating that most changes in training methods and model architecture for GPT-4o were focused on audio, image and video modalities as opposed to text. This is rather surprising, given that Google’s recently released Gemini models boast comparable abilities in natural language but significantly larger context windows, allowing them to consume much longer texts in their prompts. In light of this, it will be interesting to see when and how OpenAI releases their next groundbreaking language model.

Conclusion

In conclusion, GPT-4o is a truly multi-modal extension of GPT-4 Turbo, accepting text, audio, and images as inputs and generating them as outputs, in any combination. GPT-4o outperforms other SOTA multi-modal models on audio and vision tasks but doesn’t demonstrate any marked improvement on text and natural language tasks. Furthermore, OpenAI is rolling out these multi-modal capabilities iteratively, starting with text and vision capabilities.

For more information about GPT-4o, including its impressive multilingual capabilities, check out the original release blog post.

📝 Foundation models in AI: An introduction for beginners (GPTech)

📝 What are Multimodal models? (Towards Data Science)

Sources

- Mahmood, O. (2023, October 16). What are Multimodal models? Towards Data Science.

- OpenAI. (2024, May 13). Hello GPT-4o. OpenAI.

- OpenAI. (2024, January 15). How OpenAI is approaching 2024 worldwide elections. OpenAI.

- OpenAI. (2022, September 21). Introducing Whisper. OpenAI.

- Zhang, W., Aljunied, S. M., Gao, C., Chia, Y. K., Bing, L.(2023, November 10). M3Exam: A Multilingual, Multimodal, Multilevel Benchmark for Examining Large Language Models. arXiv.

- Google DeepMind. (2023, December 6). Welcome to the Gemini era. Google DeepMind.

- Anthropic. (2024, March 4). Introducing the next generation of Claude. Anthropic.